A new vulnerability called LeftoverLocals has been discovered that allows attackers to steal sensitive data processed on graphics processing units (GPUs).

The vulnerability, tracked as CVE-2023-4969, was uncovered by researchers at Trail of Bits and impacts GPUs from major vendors including Apple, AMD, and Qualcomm. It targets GPU local memory, a region that applications such as machine learning models use to optimize performance.

By exploiting LeftoverLocals, attackers could potentially listen in on AI chatbots and reconstruct sensitive outputs.

What Is the LeftoverLocals Flaw?

LeftoverLocals is a vulnerability that allows one GPU application to access leftover data in the local memory from another application. This violates the expected isolation between different applications running on the same GPU hardware.

Local memory is a small, fast memory region located within the GPU and shared between threads running on the same compute unit. It acts as a software-managed cache that developers can use to optimize performance by storing frequently reused data.

The researchers found that on vulnerable GPUs, local memory is not cleared between GPU kernel executions, even when those kernels belong to different applications. By repeatedly launching a kernel that reads uninitialized local memory, a malicious application can steal whatever data other applications left behind there.
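The mechanism can be illustrated with a toy simulation. This is a minimal sketch in plain Python, not GPU code: `ToyComputeUnit`, `victim_kernel`, and `listener_kernel` are hypothetical names invented for illustration, and the "local memory" is just a byte buffer that is never zeroed between kernel runs.

```python
class ToyComputeUnit:
    """Toy model of one GPU compute unit with a small local memory region."""

    def __init__(self, local_mem_bytes=64):
        self.local_mem = bytearray(local_mem_bytes)

    def run_kernel(self, kernel):
        # Models a vulnerable GPU: the kernel receives local memory as-is,
        # including whatever the previous kernel left behind.
        return kernel(self.local_mem)


def victim_kernel(local_mem):
    """Hypothetical victim: stages sensitive data in local memory."""
    secret = b"patient-record-42"
    local_mem[: len(secret)] = secret


def listener_kernel(local_mem):
    """Hypothetical attacker: dumps local memory without writing to it first."""
    return bytes(local_mem)


cu = ToyComputeUnit()
cu.run_kernel(victim_kernel)             # victim application runs first
leaked = cu.run_kernel(listener_kernel)  # attacker runs next on the same unit
print(leaked.rstrip(b"\x00"))            # b'patient-record-42'
```

On real hardware the attacker does not control scheduling, which is why the listener has to be launched repeatedly to catch leftover data.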

How Could This Vulnerability Be Exploited?

The researchers demonstrated how this vulnerability could be exploited to eavesdrop on large language models (LLMs) like chatbots. In a proof-of-concept attack, they showed how one application can listen in on the interactive session of an LLM chatbot running concurrently on the same GPU.

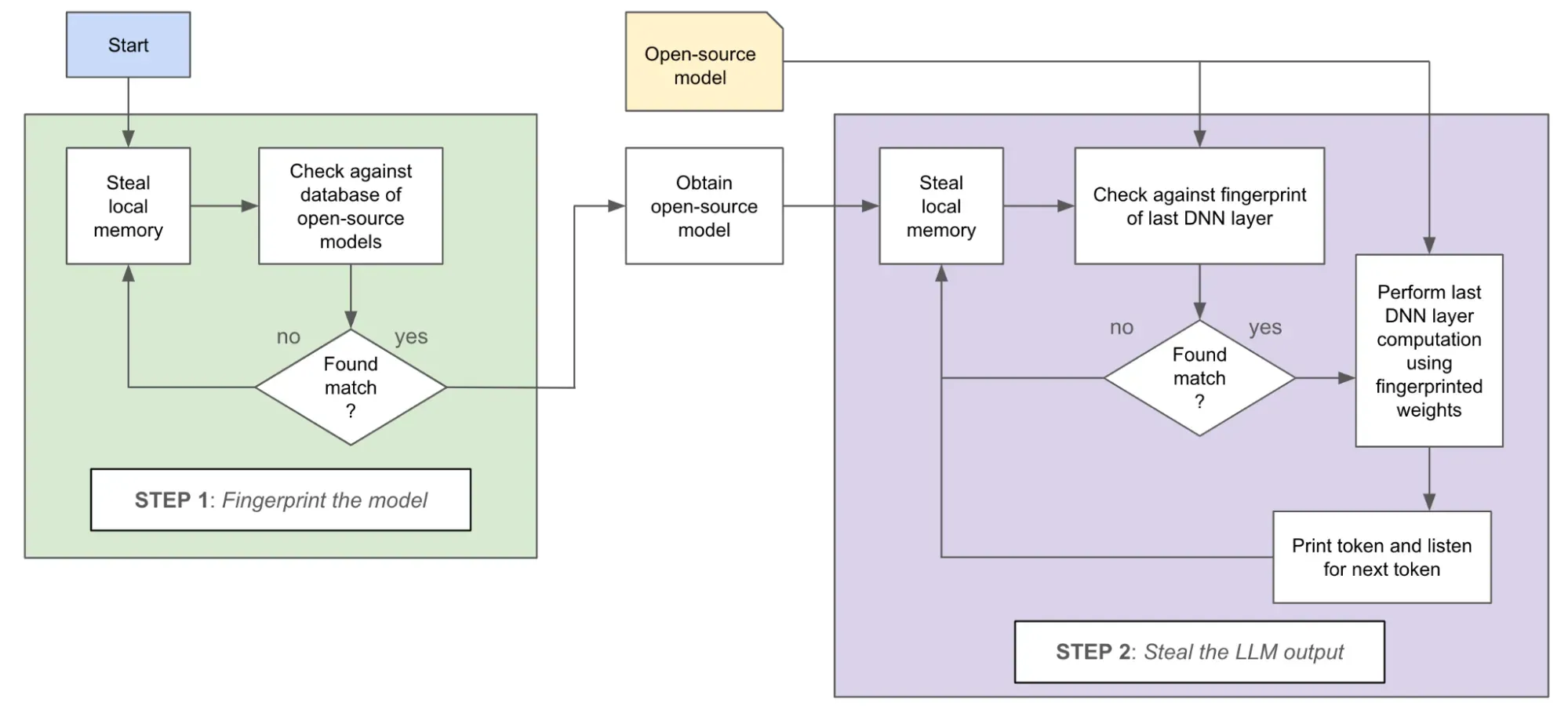

The attack works in two stages:

- Fingerprint the Model: By repeatedly reading local memory, the attacker can steal pieces of data from the LLM execution, including weights and activations. This is enough information to fingerprint which open-source model the victim is running.

- Listen to Outputs: The attacker focuses on stealing data from the output layer of the model. By stealing the input to this layer, which fits entirely in local memory, the attacker can reproduce the output and reconstruct the LLM's responses.
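The first stage can be sketched as a matching problem. The example below is a simplified illustration, assuming the attacker holds the public weight files of a few candidate models and checks which one contains the most leaked fragments. The function name `fingerprint_model`, the candidate names, and the byte values are all hypothetical, and exact substring matching is a stand-in for whatever comparison a real attack would use.

```python
def fingerprint_model(leaked_chunks, candidate_weights):
    """Return the candidate whose weight bytes contain the most leaked chunks."""
    scores = {
        name: sum(1 for chunk in leaked_chunks if chunk in blob)
        for name, blob in candidate_weights.items()
    }
    best = max(scores, key=scores.get)
    return best, scores


# Hypothetical stand-ins for the weight files of two open-source models.
candidate_weights = {
    "model-a": bytes(range(0, 128)),
    "model-b": bytes(range(128, 256)),
}

# Hypothetical fragments recovered from local memory. The last one matches
# neither model, standing in for activations or other noise.
leaked_chunks = [
    bytes(range(130, 138)),
    bytes(range(200, 208)),
    b"\xff\x00\xff\x00",
]

best, scores = fingerprint_model(leaked_chunks, candidate_weights)
print(best, scores)  # model-b {'model-a': 0, 'model-b': 2}
```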

In tests, the attack reconstructed LLM outputs with high accuracy, introducing only minor errors or artifacts. It was able to steal up to 181 MB of data per query on an AMD GPU, enough to fully reproduce responses from a 7B-parameter LLM.
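The second stage works because the output layer is a matrix-vector product: once the attacker knows the model (and therefore its public weights) and has stolen the layer's input vector, the result can be recomputed offline. The sketch below uses toy dimensions and assumes greedy decoding (always picking the highest-scoring token). The vocabulary, weights, and `reconstruct_token` helper are hypothetical.

```python
def reconstruct_token(stolen_hidden, output_weights, vocab):
    """Recompute the output layer (logits = W @ h) and pick the top token."""
    logits = [
        sum(w * h for w, h in zip(row, stolen_hidden))
        for row in output_weights
    ]
    best_index = max(range(len(logits)), key=logits.__getitem__)
    return vocab[best_index], logits


# Hypothetical 3-token vocabulary and 3x4 output weight matrix, standing in
# for the public weights of the fingerprinted open-source model.
vocab = ["yes", "no", "maybe"]
output_weights = [
    [0.5, -1.0, 0.0, 2.0],
    [1.0, 1.0, 1.0, 1.0],
    [-2.0, 0.0, 0.5, 0.0],
]

# Hypothetical input to the output layer, as recovered from local memory.
stolen_hidden = [1.0, 2.0, 0.0, -1.0]

token, logits = reconstruct_token(stolen_hidden, output_weights, vocab)
print(token, logits)  # no [-3.5, 2.0, -2.0]
```

Repeating this for each generated token yields the response text. Corrupted or partially overwritten vectors would produce wrong tokens, which is consistent with the minor errors and artifacts reported.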

Why Does This Matter for AI Systems?

While the attack focused specifically on listening to LLM chatbots, the implications are much broader. The rise of AI, especially transformer models, has led to an explosion of applications powered by GPUs. The fact that GPUs can leak sensitive data between concurrent applications is highly concerning.

LLMs are especially prone to this attack because their linear algebra operations make extensive use of local memory for performance. However, any application using local memory could be at risk, including image processing, scientific computing, and graphics.

Figure: Steps of the PoC exploit, whereby an attacker process can uncover data to listen in on another user's interactive LLM session with high fidelity.

The vulnerability highlights that AI systems carry unknown security risks when built on closed-source components like GPUs. Even open-source models can be compromised by vulnerabilities in the underlying hardware and system software stacks. More rigorous security reviews are needed at all levels of the AI stack.

Which GPUs Are Impacted by LeftoverLocals?

The researchers tested GPUs from all major vendors using a variety of applications and frameworks like Vulkan, OpenCL, and Metal.

They observed the LeftoverLocals vulnerability on Apple, AMD, and Qualcomm GPUs, including both discrete GPUs and integrated mobile chips. Some examples of impacted hardware include:

- AMD Radeon RX 7900 XT

- Apple M2 Chip

- Qualcomm Adreno GPUs

- Apple iPad Air 3

- MacBook Air M2

GPUs from NVIDIA, Intel, and Arm were not observed to be vulnerable in testing. Google also reported that some Imagination GPUs are impacted, though Trail of Bits did not observe the issue itself.

The amount of data leaked depends on the GPU architecture, with larger GPUs able to expose more memory. For example, the AMD Radeon RX 7900 XT was able to leak up to 5.5 MB per kernel invocation.
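A back-of-the-envelope calculation shows where a figure of this size could come from. The compute unit count and per-unit local memory size below are assumptions taken from public specifications for this card, not from the article, and the invocation count is only what the two quoted figures imply when divided.

```python
# Assumed hardware figures (not stated in the article): 84 compute units,
# each with 64 KiB of local memory.
COMPUTE_UNITS = 84
LOCAL_MEM_PER_CU_BYTES = 64 * 1024

leak_per_invocation = COMPUTE_UNITS * LOCAL_MEM_PER_CU_BYTES
leak_per_invocation_mb = leak_per_invocation / 1_000_000

# Per-query figure quoted earlier in the article.
LEAK_PER_QUERY_MB = 181
implied_invocations = LEAK_PER_QUERY_MB / leak_per_invocation_mb

print(leak_per_invocation)               # 5505024
print(round(leak_per_invocation_mb, 1))  # 5.5
print(round(implied_invocations))        # 33
```

Under these assumptions, dumping every compute unit's local memory once yields about 5.5 MB, and the 181 MB per-query figure corresponds to roughly 33 such dumps.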

Coordinated Disclosure and Mitigations

Trail of Bits has been working with impacted vendors and CERT/CC on coordinated disclosure since September 2023. Apple was the only vendor unresponsive during the process.

Some vendors have developed mitigations and patches:

- Qualcomm released an updated firmware that addresses LeftoverLocals for certain devices.

- Apple confirmed fixes for newer A17 and M3 series chips.

- Google updated ChromeOS and Android to include mitigations for AMD/Qualcomm GPUs.

However, many GPUs remain vulnerable at this time.

For users, potential mitigations include clearing local memory manually in GPU kernels or avoiding multi-tenant GPU environments. However, these require deep technical knowledge and may introduce performance overhead.
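The manual-clearing mitigation amounts to overwriting local memory before a kernel exits. The toy model below illustrates the idea in plain Python and is not real GPU code. The kernel functions and the byte buffer are hypothetical stand-ins, and a real kernel would have each workgroup zero its own local memory as its final step.

```python
def vulnerable_kernel(local_mem, secret):
    """Uses local memory as scratch space and exits without cleaning up."""
    local_mem[: len(secret)] = secret


def hardened_kernel(local_mem, secret):
    """Does the same work, but zeroes local memory before returning."""
    try:
        local_mem[: len(secret)] = secret
    finally:
        # Overwrite the whole region so nothing is left for the next kernel.
        local_mem[:] = bytes(len(local_mem))


def listener(local_mem):
    """Hypothetical attacker kernel: reads whatever is already there."""
    return bytes(local_mem)


local_mem = bytearray(32)  # toy stand-in for one compute unit's local memory

vulnerable_kernel(local_mem, b"api-key-123")
before = listener(local_mem).rstrip(b"\x00")
print(before)  # b'api-key-123'

hardened_kernel(local_mem, b"api-key-123")
after = listener(local_mem).rstrip(b"\x00")
print(after)   # b''
```

The extra write pass at the end of every kernel is the source of the performance overhead mentioned above.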

Looking Ahead at GPU Security

The researchers argue that stronger specifications, testing, and auditing are needed across the GPU ecosystem in light of vulnerabilities like LeftoverLocals. As GPUs become more widespread, including in privacy-sensitive domains like healthcare, their security implications cannot be ignored.

The Khronos Group, the standards body behind GPU APIs like Vulkan and OpenCL, has been engaged in the disclosure process and is exploring ways to improve security in GPU standards. However, securing the diverse GPU ecosystem remains a long-term challenge.

The GPU security landscape is complex, with closed-source drivers and rapidly evolving software and hardware stacks. Users currently lack visibility into security risks, and new attack surfaces are still being uncovered in these systems. Continued auditing by security experts will be key to hardening safety-critical AI systems built on GPUs and other accelerators.